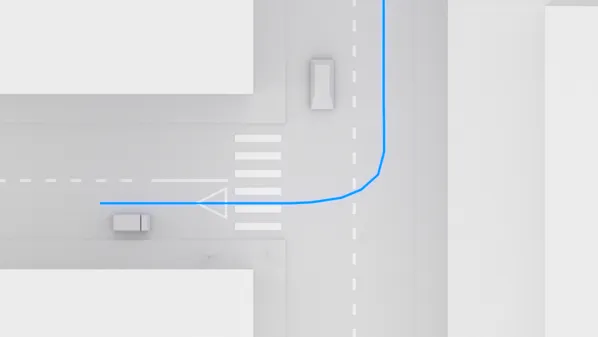

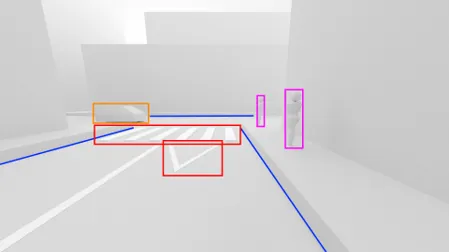

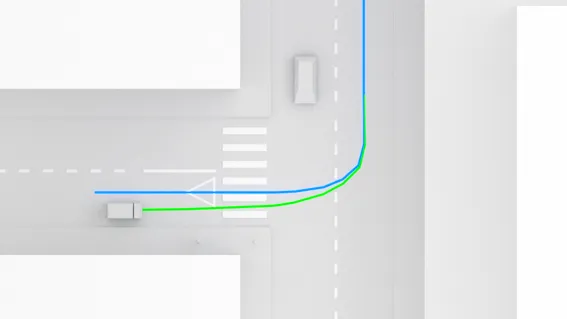

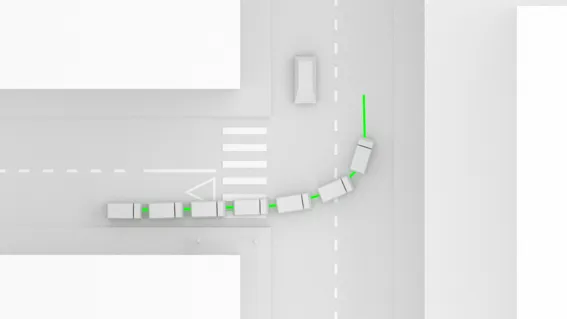

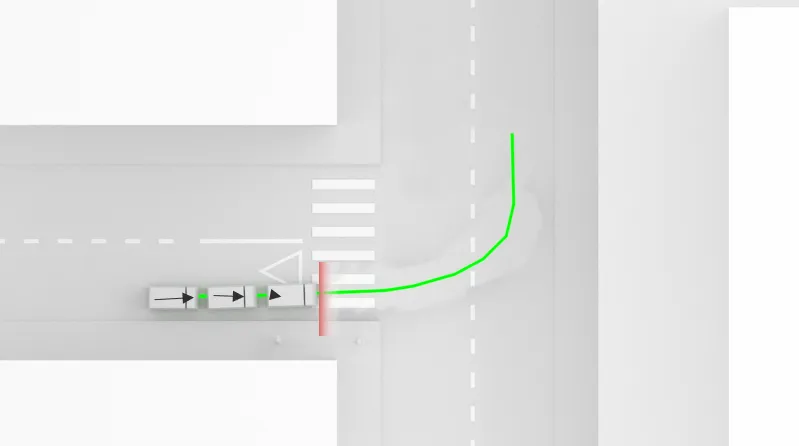

Mapping & Routing

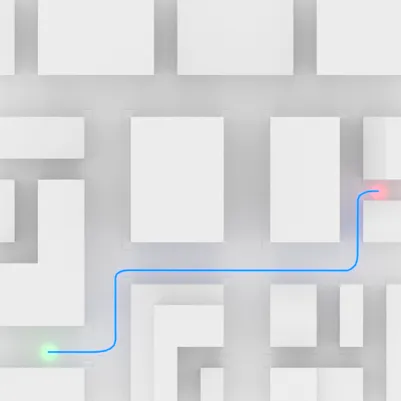

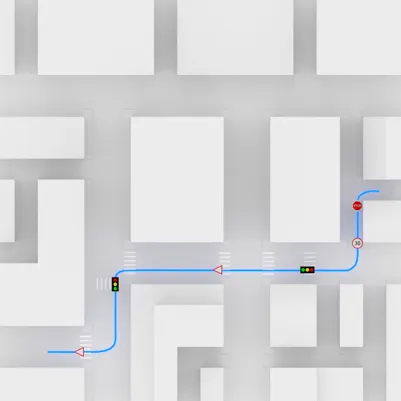

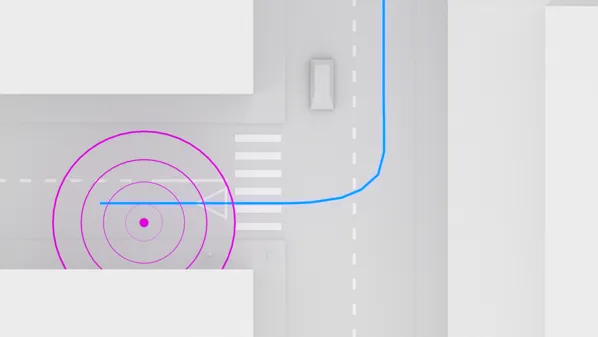

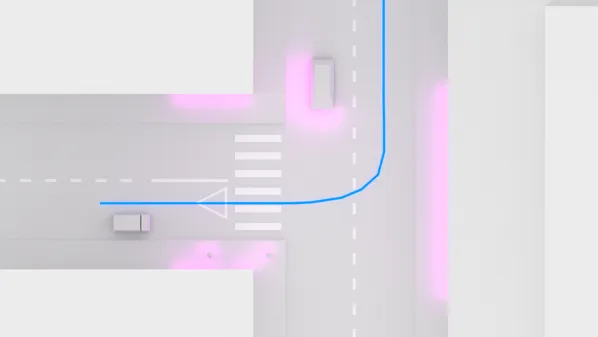

Using our teleoperation technology, we collect data and premap cities before deploying our Autonomous Driving mode. Then, combining our recorded data with public API data, our routing algorithm finds the optimal path before the journey begins.
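
As a rough illustration of how recorded map data and public data could feed a route search, here is a minimal sketch. It is not our production routing algorithm: it uses a textbook Dijkstra shortest-path search, and the names `blend_cost`, `build_graph`, and `shortest_route`, the sample road segments, and the idea of multiplying a recorded traversal time by a public traffic factor are all assumptions made for the example.

```python
import heapq


def blend_cost(recorded_seconds, public_delay_factor):
    """Hypothetical blend: scale a recorded traversal time by a public traffic factor."""
    return recorded_seconds * public_delay_factor


def build_graph(recorded, traffic):
    """Build an adjacency list {node: [(neighbor, cost), ...]} from two data sources.

    recorded: {(src, dst): seconds} from premapping (assumed format).
    traffic:  {(src, dst): factor} from a public source (assumed format);
              segments without an entry default to a factor of 1.0.
    """
    graph = {}
    for (src, dst), seconds in recorded.items():
        factor = traffic.get((src, dst), 1.0)
        graph.setdefault(src, []).append((dst, blend_cost(seconds, factor)))
    return graph


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm. Returns (total_cost, path), or (inf, []) if unreachable."""
    best = {start: 0.0}      # lowest known cost to each node
    previous = {}            # back-pointers used to rebuild the path
    queue = [(0.0, start)]   # min-heap of (cost so far, node)
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            path = [node]
            while node in previous:
                node = previous[node]
                path.append(node)
            return cost, path[::-1]
        if cost > best.get(node, float("inf")):
            continue  # stale heap entry; a cheaper route to this node was already found
        for neighbor, edge_cost in graph.get(node, []):
            new_cost = cost + edge_cost
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    return float("inf"), []


# Illustrative data only: four directed road segments between made-up intersections.
recorded = {("A", "B"): 60, ("B", "D"): 60, ("A", "C"): 50, ("C", "D"): 50}
traffic = {("A", "C"): 2.0}  # pretend a public source reports congestion on A -> C

graph = build_graph(recorded, traffic)
total_seconds, route = shortest_route(graph, "A", "D")
```

In this toy example the route through `C` is shorter on recorded time alone, but the congestion factor makes the route through `B` cheaper, which is the kind of trade-off that blending the two data sources is meant to capture.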